Can visual and auditory training generalize to novel conditions?

Vision and hearing can become more sensitive with behavioral training. Ideally, the benefits of training should generalize to untrained conditions. Why is training not always optimal? How can we enhance the generalization of training both within and across modalities? My work in visual, auditory, and cross-modal learning reveals the important roles of top-down attention and bottom-up stimulation in the generalization of learning outcomes.

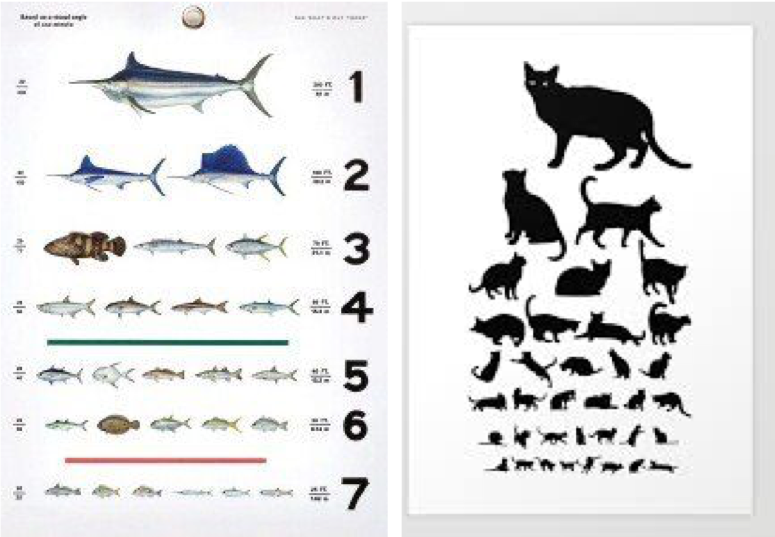

How can people with low vision read better?

Low vision cannot be corrected by conventional eyeglasses and causes difficulties in many daily activities. Difficulty in reading is one of the primary complaints of individuals with low vision. Can we customize an optimal reading configuration for each individual with low vision to make reading more efficient? My work investigates the impact of font, print size, display format, and other factors to come up with a recipe for optimal reading.

Localization, navigation and spatial learning with impaired vision and/or hearing

Vision and hearing are the most important senses for localizing the direction and distance of objects around us, for safely navigating familiar and unfamiliar environments, and for forming an internal map of a learned spatial layout. How do different types of vision and/or hearing loss affect these abilities? Does the laboratory finding of “optimal sensory integration” hold in real life? Is it possible to train such optimal integration in different real-life environments? I am conducting a series of studies to answer these questions.

Interaction between vision and hearing in speech perception

Vision plays a complementary role in speech perception. When we are at a big party where many people are talking at the same time, our vision helps us localize the person we are interested in. When the background is loud, observing our friend’s lip and head movements helps us understand their speech better. To what extent do people with vision impairment use their residual vision to aid speech perception?